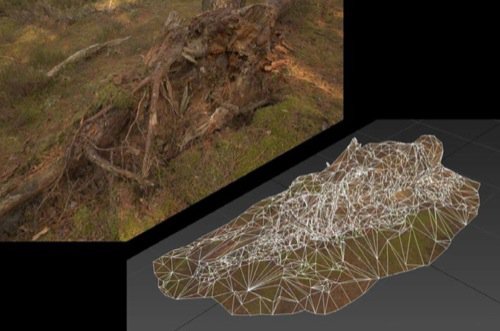

More images are generally better, but too many makes the matching process exponentially slower. a work space or bookshelves.Īim for around 50-80 photos of your subject from different positions, heights and angles. You need a static, well-lit, visually busy or cluttered space, e.g. Likewise, transparent objects and moving subjects are unlikely to be picked up. When choosing your subject, be aware large expanses of blank space (e.g. You need a good set of images with enough matches to create a point cloud. The camera metadata is then used to calculate and plot the location of those points in three dimensional (x,y,z) space. Photogrammetry software works by detecting matching points across multiple images.

This is probably the most important step in the whole process. This workflow uses the following freely accessible applications: Regard3d, MeshLab, and Unity. a desk space, or bookcase), a digital camera or smartphone, a Mac with minimum 4GB RAM (larger point clouds require 8GB RAM) and an OpenGL capable graphics card. Here, we share our workflow for transforming batches of photos into 3d models using a Mac.

PHOTOGRAMETRY UNITY 2019 MAC OS

To make things more complicated, we’re using a 2019 MacBook Pro with an AMD Radeon 5500M GPU, running Mac OS Catalina. MeshRoom), but most of them are based on CUDA, and therefore require a NVIDIA GeForce graphics card. There are a few free photogrammetry options available (e.g. We wanted to find a way to create point clouds from smartphone camera images to lower barriers to participating in the project. While LiDAR scanners are able to produce high resolution point clouds, their cost is prohibitive for non-specialist users. These interiors represent individual spaces and experiences of lockdown. One of our current projects with artist, Nisha Duggal, involves stitching together point clouds of interior spaces to create an ‘impossible architecture’.

PHOTOGRAMETRY UNITY 2019 HOW TO

How to turn your smartphone photos into 3d digital models
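To see why the photo count matters, here is a minimal sketch that counts the image pairs an exhaustive matcher would have to compare. It assumes every photo is matched against every other one; the function name is hypothetical, and some tools restrict matching to neighbouring images, which scales better.

```python
def exhaustive_pair_count(n_images: int) -> int:
    """Unordered image pairs compared if every photo is matched with every other.

    Hypothetical helper for illustration: n * (n - 1) / 2 pairs.
    """
    if n_images < 0:
        raise ValueError("image count cannot be negative")
    return n_images * (n_images - 1) // 2


# Doubling the photo count roughly quadruples the matching work.
for n in (50, 80, 160):
    print(n, "photos ->", exhaustive_pair_count(n), "pairs")
```

For the suggested range, 50 photos give 1,225 pairs and 80 give 3,160, while 160 photos would already need 12,720 comparisons.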
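One way the camera metadata feeds into the reconstruction is through the focal length. The sketch below uses the standard pinhole-camera conversion from an EXIF focal length in millimetres to a focal length in pixels, which structure-from-motion tools need before they can place points in 3D. It assumes you know the physical sensor width (photogrammetry tools usually look this up in a camera database) and that the photo was not cropped or digitally zoomed; the function name and the example values are hypothetical, not taken from any specific phone.

```python
def focal_length_pixels(focal_mm: float, sensor_width_mm: float,
                        image_width_px: int, image_height_px: int) -> float:
    """Convert an EXIF focal length (mm) to pixels using the pinhole model.

    Assumes sensor_width_mm is the sensor's long side, so it is paired with
    the image's long side; this also handles portrait-orientation photos.
    """
    if focal_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    long_side_px = max(image_width_px, image_height_px)
    return focal_mm * long_side_px / sensor_width_mm


# Illustrative (hypothetical) values: 4.25 mm lens, 5.6 mm wide sensor,
# 4032 x 3024 pixel photo.
f_px = focal_length_pixels(4.25, 5.6, 4032, 3024)
print(f"focal length: {f_px:.1f} px")  # roughly 3060 px
```

If the metadata is missing or wrong, the software has to guess this value, which is one reason photos straight from the camera (with EXIF intact) tend to reconstruct more reliably than edited or re-saved copies.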
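To make "plotting matched points in (x, y, z) space" concrete, here is a simplified sketch of triangulating one matched feature from two photos using the midpoint method: each match defines a viewing ray from its camera, and the 3D point is taken as the midpoint of the rays' closest approach. This is an assumption-laden illustration, not how Regard3D is implemented: it assumes the camera positions are already known and both cameras face the same direction (no rotation), whereas real pipelines estimate full camera poses from many views and refine them. All names and numbers are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pixel_to_ray(u: float, v: float, f_px: float, cx: float, cy: float) -> Vec3:
    """Viewing-ray direction through pixel (u, v) for an unrotated pinhole camera."""
    return (u - cx, v - cy, f_px)


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the closest approach between rays c1 + s*d1 and c2 + t*d2."""
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; no stable intersection")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = add(c1, scale(d1, s))  # closest point on ray 1
    p2 = add(c2, scale(d2, t))  # closest point on ray 2
    return scale(add(p1, p2), 0.5)


# Hypothetical setup: two cameras 2 units apart along x, both facing +z,
# f_px = 1000 and image coordinates centred on the principal point (0, 0).
# The same feature was matched at pixel (200, 400) in photo 1 and
# (-200, 400) in photo 2.
ray1 = pixel_to_ray(200, 400, 1000, 0, 0)
ray2 = pixel_to_ray(-200, 400, 1000, 0, 0)
point = triangulate_midpoint((0.0, 0.0, 0.0), ray1, (2.0, 0.0, 0.0), ray2)
print(point)  # approximately (1.0, 2.0, 5.0)
```

The parallel-ray check hints at why photos from different positions matter: if two cameras sit in almost the same place, their rays barely diverge and the depth of a point cannot be pinned down reliably.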
